Today we are pleased to announce that NGINX Plus R12 is available as a free upgrade for all NGINX Plus subscribers. NGINX Plus is a high‑performance software application delivery platform that includes a load balancer, content cache, and web server. NGINX Plus R12 is a significant release with new features focused on clustering, customization, and monitoring.

To learn more about NGINX Plus R12, watch our on‑demand webinar.

Enterprise users will benefit from the new clustering feature, which simplifies the process of managing highly available clusters of NGINX Plus servers. All users will benefit from official support for nginScript, a lightweight and high‑performance scripting language that is embedded directly into the NGINX configuration. Enhancements to monitoring and instrumentation, caching, and health checks improve the reliability and performance of applications.

- Configuration sharing – A supported configuration sharing script enables you to reliably push configuration from a master NGINX Plus instance to a set of peers. The process performs backups and verifies that the configuration is valid on each remote peer before committing the configuration and reloading the peer’s nginx process.

- nginScript general availability – nginScript enables you to create sophisticated traffic‑handling solutions with NGINX Plus using familiar JavaScript syntax. nginScript code can be embedded in the NGINX Plus configuration to perform custom actions on HTTP, TCP, and UDP traffic.

- New statistics – Visibility is key to maintaining healthy and high‑performing applications. NGINX Plus R12 provides greater visibility into application performance with additional metrics including server response times, shared memory zone utilization, and error codes for TCP/UDP services. View the new statistics on the built‑in live activity monitoring dashboard or export them in JSON format to your favorite monitoring tool.

- Enhanced caching – Get even better performance from your cache. NGINX Plus R12 supports the stale-while-revalidate and stale-if-error cache extensions defined in RFC 5861. Cache revalidation can be done in the background so that users do not have to wait for the round trip to the origin server to complete.

- Health check improvements – When new servers are added to a load‑balancing pool using the on‑the‑fly reconfiguration API or DNS, NGINX Plus can delay sending them traffic until they pass the regular configured health check, and then apply the slow‑start feature to ramp up traffic to them. Also, a new, simpler health check for UDP makes it easier to deploy reliable UDP services to your users.

Further enhancements provide client certificate authentication for TCP services, the ability to inspect custom fields in OAuth JWTs, support for the WebP format in the Image-Filter module, and a number of performance and stability improvements.

We encourage all subscribers to update to NGINX Plus R12 right away to take advantage of the performance, functionality, and reliability improvements in this release. Before you perform the update, be sure to review the changes in behavior listed in the next section.

Changes in Behavior

NGINX Plus R12 introduces some changes to default behavior and NGINX Plus internals that you need to be aware of when upgrading:

- Cache metadata format – The format of the internal cache metadata header has changed. When you upgrade to NGINX Plus R12, the on‑disk cache becomes invalid and NGINX Plus automatically refreshes the cache as needed. Old cache entries are automatically deleted.

- SSL “DN” variables – The format of the $ssl_client_s_dn and $ssl_client_i_dn variables has changed. The comma (,) now serves as the field separator instead of the forward slash (/), and values are escaped as specified in RFCs 2253 and 4514. To continue using the X509_NAME_oneline format with the forward‑slash field separator, use the $ssl_client_s_dn_legacy and $ssl_client_i_dn_legacy variables.

- Maximum connections queue – The queue directive tells NGINX Plus to queue connections to upstream servers if they are overloaded. As a consequence of memory and performance optimizations in R12, the queue directive must now appear in the upstream block below the directive that specifies the load‑balancing algorithm (hash, ip_hash, least_conn, or least_time).

- Third‑party dynamic modules – Dynamic modules authored or certified by NGINX, Inc. are provided in our repository. Any third‑party modules must be recompiled against open source NGINX version 1.11.10 to continue working with NGINX Plus R12. Please refer to the NGINX Plus Admin Guide for more information.

- Final release for end‑of‑life OS versions – Ubuntu has announced end‑of‑life for Ubuntu 12.04 LTS, and Red Hat has announced end‑of‑life for Production Phase support of Red Hat Enterprise Linux 5.10+, both effective March 31, 2017. NGINX Plus R12 is the last release that will include packages for, and support, these OS versions, as well as CentOS 5.10+ and Oracle Linux 5.10+. To continue receiving updates and support after NGINX Plus R12, update to a supported OS version.
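To illustrate the new ordering rule for queue, here is a minimal sketch of an upstream block that uses the Least Connections algorithm. The group name, server names, and the max_conns, queue size, and timeout values are hypothetical and chosen only for illustration.

```nginx
upstream backend {
    zone backend 64k;

    # The load-balancing algorithm directive comes first
    least_conn;

    # max_conns limits concurrent connections to each server
    server backend1.example.com max_conns=100;
    server backend2.example.com max_conns=100;

    # In R12, queue must appear below the algorithm directive. When no
    # server can be selected (for example, all are at max_conns), up to 50
    # requests wait here for at most 30 seconds before a 502 is returned.
    queue 50 timeout=30s;
}
```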

NGINX Plus R12 Features In Detail

Configuration Sharing

NGINX Plus servers are often deployed in a cluster of two or more instances for high availability and scalability purposes. This enables you to improve the reliability of your services by insulating them from the impact of server failure, and also to scale out and handle large volumes of traffic.

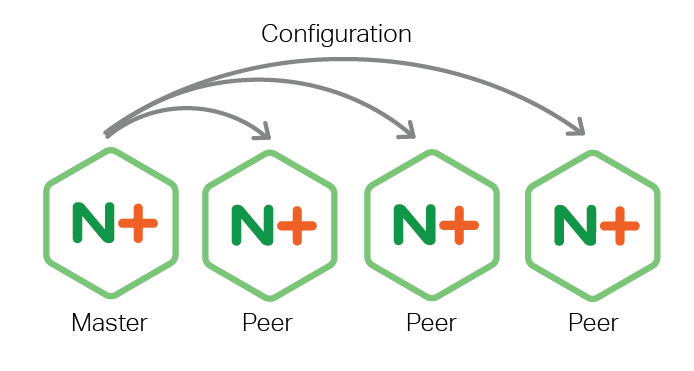

NGINX Plus already offers a supported way to distribute traffic across a cluster in either active‑passive or active‑active fashion. NGINX Plus R12 adds a further supported method of synchronizing configuration across a cluster. This configuration synchronization feature allows an administrator to configure a “cluster” of NGINX Plus servers from a single location. These servers share a common subset of their configuration.

Here’s how you perform configuration sharing:

- Nominate one or more “master” nodes in the cluster.

- Install the new nginx-sync package on the master nodes.

- Apply configuration changes to a master node.

- Invoke the configuration sync process, nginx-sync.sh, which is included in the nginx-sync package, to update each of the other servers in the cluster (the peers). The sync process performs these steps on each peer:

  - Pushes the updated configuration to the peer over ssh or rsync.

  - Verifies that the configuration is valid for the peer, and rolls it back if not.

  - Applies the configuration if it is valid, and reloads the NGINX Plus servers on the peer.

This capability is especially helpful when NGINX Plus is deployed as a fault‑tolerant pair (or larger group) of servers. You can use it to simplify the reliable deployment of configuration within a cluster, or to push configuration from a staging server to a cluster of production servers.

For detailed instructions, see the NGINX Plus Admin Guide.

nginScript General Availability

With NGINX Plus R12 we are pleased to announce that nginScript is now generally available as a stable module for both open source NGINX and NGINX Plus. nginScript is fully supported for NGINX Plus subscribers.

nginScript is a unique JavaScript implementation for NGINX and NGINX Plus, designed specifically for server‑side use cases and per‑request processing. It enables you to extend NGINX Plus configuration syntax with JavaScript code in order to implement sophisticated configuration solutions.

With nginScript you can log custom variables using complex logic, control upstream selection, implement load‑balancing algorithms, customize session persistence, and even implement simple web services. We’ll be publishing full instructions for some of these solutions on our blog in the next few weeks, and we’ll add links to them here as they become available.

Specifically, NGINX Plus R12 includes the following enhancements:

- Preread phase in the Stream module

- ECMAScript 6 Math methods and constants

- Additional String methods such as endsWith, includes, repeat, startsWith, and trim

- Scopes

Although nginScript is stable, work continues to support even more use cases and JavaScript language coverage. Learn more in Introduction to nginScript on our blog.
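As a rough sketch of the custom‑logging use case, the configuration below assumes the nginScript dynamic module for HTTP is installed, and that a JavaScript file at the hypothetical path /etc/nginx/custom_logging.js (not shown) defines a function with the hypothetical name compute_label. The js_include directive loads the file, js_set binds the function's return value to a variable, and a log_format then references that variable like any built‑in one.

```nginx
# Load the nginScript HTTP module (installed as a dynamic module)
load_module modules/ngx_http_js_module.so;

events {}

http {
    # Hypothetical file that defines a JavaScript function named compute_label
    js_include /etc/nginx/custom_logging.js;

    # $custom_label is computed by compute_label() when it is first used
    js_set $custom_label compute_label;

    log_format with_label '$remote_addr "$request" $status $custom_label';

    server {
        listen 80;
        access_log /var/log/nginx/access.log with_label;

        location / {
            return 200 "ok\n";
        }
    }
}
```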

New Statistics

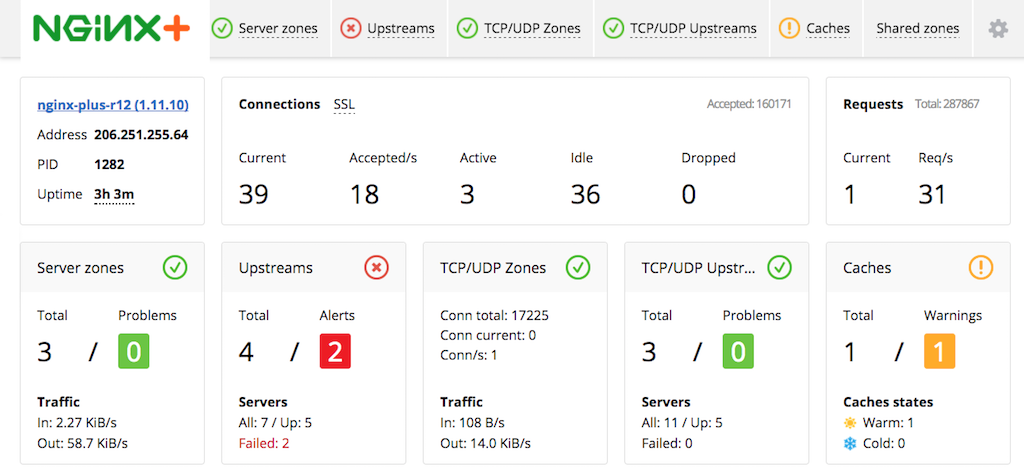

The monitoring and instrumentation in NGINX Plus is an important value‑add that gives you deep insights into the performance and behavior of both NGINX Plus and upstream applications. Statistics are provided both on our built‑in graphical dashboard and in JSON format that you can export to your favorite monitoring tool.

NGINX Plus R12 adds a number of improvements: more information about the performance (response time) of load‑balanced servers, insights into the operation of TCP and UDP services, and a range of internal data that helps you identify issues during troubleshooting and tune NGINX Plus for optimal performance.

New statistics in NGINX Plus R12 include:

- Upstream server response time – In NGINX Plus R11 and earlier, response time from upstream servers was calculated only when the Least Time load‑balancing algorithm was used. Starting in NGINX Plus R12, response time is measured for all algorithms and reported both in the JSON data and on the built‑in dashboard.

- Response codes for TCP/UDP upstreams in the graphical dashboard – The Stream module for TCP/UDP load balancing generates pseudo status codes to identify how a connection was closed (200 means “success”, 502 means “upstream unavailable”, and so on), reporting them in the $status variable. NGINX Plus R12 adds a series of columns to the dashboard to display the counts of response codes for TCP and UDP virtual servers, like those already provided for HTTP virtual servers. The pseudo status codes can also be logged for integration with existing log‑analysis tools.

- NGINX Plus version information – To help with troubleshooting, the JSON data now reports the NGINX Plus release number as nginx_build (for example, nginx-plus-r12) as well as the open source NGINX version number as nginx_version (for example, 1.11.10). The dashboard displays both values in the top left box on the main tab.

- Upstream hostname – In the JSON data, the server field identifies a server in an upstream group by IP address and port. The new name field reports the first parameter to the server directive in the upstream configuration block, which can be a domain name or UNIX‑domain socket path as well as an IP address and port. On the dashboard, the leftmost Name column on the Upstreams and TCP/UDP Upstreams tabs now reports the value from the name field instead of the server field (which was reported in NGINX Plus R11 and earlier). The new field makes it easier to correlate the JSON data and dashboard with your upstream servers if you define them using DNS names, particularly names that resolve to multiple IP addresses.

- Shared memory zone utilization in extended status data – Shared memory zones are configured with a fixed size, and it can be difficult to select a size that is neither too big (memory is allocated but never used) nor too small (memory is exhausted and cached items are discarded).

  New instrumentation in the extended status data provides a detailed internal report of the memory usage of each shared zone. This lets you monitor memory usage, and helps NGINX technical support give more informed advice on optimizing your NGINX configuration.

- JSON‑compliant escaping in the NGINX Plus access log – Some modern log‑analysis tools can efficiently ingest JSON‑formatted log files, and are able to draw insights more easily than they can from unformatted log files. You can define a JSON template in the standard NGINX Plus log_format directive, and the new optional escape parameter to the directive enforces JSON‑compliant escaping so that log lines are correctly formatted.
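For illustration, a JSON‑shaped access log that uses the escape parameter might look like the following sketch. The format name, the selection of fields, and the log path are hypothetical.

```nginx
http {
    # escape=json escapes characters in variable values (double quotes,
    # backslashes, control characters) so that each log line is valid JSON
    log_format json_log escape=json
        '{'
            '"time_local":"$time_local",'
            '"remote_addr":"$remote_addr",'
            '"request":"$request",'
            '"status":"$status",'
            '"request_time":"$request_time",'
            '"upstream_response_time":"$upstream_response_time",'
            '"http_user_agent":"$http_user_agent"'
        '}';

    access_log /var/log/nginx/access.json json_log;
}
```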

For more details on extended status monitoring, please see this page.
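As a starting point, the following sketch enables the extended status data and the dashboard using the R12 status module. It assumes the dashboard page is installed at /usr/share/nginx/html/status.html, and the zone name, ports, and access rule are hypothetical; the upstream group my_upstream is assumed to be defined elsewhere with a zone directive, because only upstream groups in shared memory appear in the statistics.

```nginx
http {
    server {
        listen 80;
        # Collect per-virtual-server statistics under this name
        status_zone my_site;

        location / {
            proxy_pass http://my_upstream;
        }
    }

    server {
        listen 8080;

        # JSON status data, restricted to local clients
        location /status {
            status;
            allow 127.0.0.1;
            deny all;
        }

        # Built-in live activity monitoring dashboard
        location = /status.html {
            root /usr/share/nginx/html;
        }
    }
}
```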

Enhanced Caching

NGINX Plus R12 significantly enhances the NGINX Plus caching engine.

Serve Stale Content while Updating

NGINX Plus R12 adds support for the Cache-Control extensions defined in RFC 5861, stale-while-revalidate and stale-if-error. You can now configure NGINX Plus to honor these Cache-Control extensions and continue to serve expired resources from cache while refreshing them in the background, without adding latency to the client request. Similarly, NGINX Plus can serve expired resources from cache if the upstream server is unavailable – a technique for implementing circuit breaker patterns in microservices applications.

Unless it is configured to ignore the Cache-Control header, NGINX Plus honors the stale timers from origin servers that return RFC 5861‑compliant Cache-Control headers, as in this example:

Cache-Control: max-age=3600, stale-while-revalidate=120, stale-if-error=900

To enable support for the Cache-Control extensions with background refreshing, include the proxy_cache_use_stale and proxy_cache_background_update directives, as in this configuration:

proxy_cache_path /path/to/cache levels=1:2 keys_zone=my_cache:10m max_size=10g inactive=60m use_temp_path=off;

server {
    # ...

    location / {
        proxy_cache my_cache;

        # Serve stale content when updating
        proxy_cache_use_stale updating;

        # In addition, don’t block the first request that triggers the update
        # and do the update in the background
        proxy_cache_background_update on;

        proxy_pass http://my_upstream;
    }
}

The proxy_cache_use_stale updating directive instructs NGINX Plus to serve the stale version of content while it’s being updated. If you include only that directive, the first user to request stale content pays the “cache miss” penalty: that user doesn’t receive the content until NGINX Plus has fetched it from the origin server and cached it. Users who request the stale content while it is being updated get it from the cache immediately.

With the new proxy_cache_background_update directive, all users, including the first user, are served the stale content while NGINX Plus refreshes it in the background.

The new functionality is also available in the FastCGI, SCGI, and uwsgi modules.
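The circuit‑breaker behavior mentioned earlier can be approximated by also allowing stale content when the origin server fails. The following sketch reuses the hypothetical my_cache and my_upstream names from the configuration above; the particular error conditions listed are an illustrative choice, not a recommendation.

```nginx
proxy_cache_path /path/to/cache levels=1:2 keys_zone=my_cache:10m max_size=10g inactive=60m use_temp_path=off;

server {
    location / {
        proxy_cache my_cache;

        # Serve stale content while it is being refreshed, and also when the
        # origin returns an error, times out, or responds with a 5xx code
        proxy_cache_use_stale updating error timeout http_500 http_502 http_503 http_504;

        # Refresh expired items in the background
        proxy_cache_background_update on;

        proxy_pass http://my_upstream;
    }
}
```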

Byte‑Range Requests

A further enhancement to the caching engine enables NGINX Plus to bypass the cache for byte‑range requests that start more than a configured number of bytes after the start of an uncached file. This means that for large files such as video content, requests for a byte range deep into the file do not add latency to the client request. In earlier versions of NGINX Plus, the client didn’t receive the requested byte range until NGINX Plus fetched all content from the start of the file through the requested byte range, and wrote it to the cache.

The new proxy_cache_max_range_offset directive specifies the offset from the beginning of the file past which NGINX Plus passes a byte‑range request directly to the origin server and doesn’t cache the response. (The new functionality is also available in the FastCGI, SCGI, and uwsgi modules.)

proxy_cache_path /path/to/cache levels=1:2 keys_zone=my_cache:10m max_size=10g inactive=60m use_temp_path=off;

server {
    location / {
        proxy_cache my_cache;

        # Bypass cache for byte range requests beyond 10 megabytes
        proxy_cache_max_range_offset 10m;

        proxy_pass http://my_upstream;
    }
}

These enhancements further cement NGINX Plus as a high‑performance, standards‑compliant caching engine – suitable for any environment, from accelerating application delivery to building fully fledged content delivery networks (CDNs).

Improved Health Checks

NGINX Plus R12 further enhances the active health check capabilities of NGINX Plus.

Health Check on Newly Added Servers

With the increasing adoption of containerized environments and autoscaled groups of applications, it is essential that the load balancer implements proactive, application‑level health checks and allows new servers time to initialize internal resources before they come up to speed.

Prior to NGINX Plus R12, when you added a new server to the load‑balancing pool (using the NGINX Plus on‑the‑fly reconfiguration API or DNS), NGINX Plus considered it healthy right away and sent traffic to it immediately. When you include the new mandatory parameter to the health_check directive (HTTP or TCP/UDP), the new server must pass the configured health check before NGINX Plus sends traffic to it. When combined with the slow‑start feature, the new parameter gives new servers even more time to connect to databases and “warm up” before being asked to handle their full share of traffic.

upstream my_upstream {
    zone my_upstream 64k;
    server backend1.example.com slow_start=30s;
}

server {
    location / {
        proxy_pass http://my_upstream;

        # Require new server to pass health check before receiving traffic
        health_check mandatory;
    }
}

Here both the mandatory parameter to the health_check directive and the slow_start parameter to the server directive (HTTP or TCP/UDP) are included. Servers that are added to the upstream group using the API or DNS interfaces are marked as unhealthy and receive no traffic until they pass the health check; at that point they start receiving a gradually increasing amount of traffic over a span of 30 seconds.
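For the DNS case, a minimal sketch might look like the following. It assumes a DNS server at the hypothetical address 10.0.0.2 and a hypothetical name app.example.com that can resolve to several addresses. With the resolve parameter, NGINX Plus re‑resolves the name periodically, and each newly returned address joins the group as a new server, subject to the mandatory health check and slow start.

```nginx
# Hypothetical DNS server; valid= sets how often the name is re-resolved
resolver 10.0.0.2 valid=10s;

upstream my_upstream {
    zone my_upstream 64k;

    # resolve: track the DNS name and update the group as addresses change
    server app.example.com resolve slow_start=30s;
}

server {
    location / {
        proxy_pass http://my_upstream;

        # New addresses receive no traffic until they pass this check
        health_check mandatory;
    }
}
```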

Zero Config UDP Health Check

It is just as important to implement application‑level health checks for UDP servers as for HTTP and TCP servers, so that production traffic is not sent to servers that are not fully functional. NGINX Plus supports active health checks for UDP, but prior to NGINX Plus R12 you had to create a match block that defined the data to send and the response to expect. It can be challenging to determine the proper values when using binary protocols or those with complex handshakes. For such applications, NGINX Plus R12 now supports a “zero config” health check for UDP that tests for application availability without requiring you to define send and expect strings. Add the udp parameter to the health_check directive for a basic UDP health check.

stream {
    upstream udp_app {
        # Active health checks require the group to reside in shared memory
        zone udp_app 64k;
        server backend1.example.com:1234;
        server backend2.example.com:1234;
    }

    server {
        listen 1234 udp;
        proxy_pass udp_app;

        # Basic UDP health check
        health_check udp;
    }
}

SSL Updates – Client Certificates for TCP Services, Updated Variables

SSL client certificates are commonly used to authenticate users to protected websites. NGINX Plus R12 adds authentication support for SSL‑protected TCP services to the existing HTTP support. This is typically used for machine‑to‑machine authentication, as opposed to end‑user login, and enables you to use NGINX Plus for both SSL termination and client authentication when load balancing Layer 4 protocols. Examples include authenticating IoT devices for communication with the MQTT protocol.
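A sketch of the MQTT case follows. It assumes the broker addresses, certificate paths, and CA file shown (all hypothetical); port 8883 is the conventional port for MQTT over TLS and 1883 for plain MQTT. NGINX Plus terminates TLS, requires each device to present a certificate signed by the given CA, and forwards the decrypted traffic to the brokers.

```nginx
stream {
    upstream mqtt_brokers {
        zone mqtt_brokers 64k;
        server 10.0.0.10:1883;
        server 10.0.0.11:1883;
    }

    server {
        # Terminate TLS for MQTT clients
        listen 8883 ssl;
        ssl_certificate     /etc/nginx/ssl/server.crt;
        ssl_certificate_key /etc/nginx/ssl/server.key;

        # Require a client certificate signed by this CA
        ssl_client_certificate /etc/nginx/ssl/device_ca.crt;
        ssl_verify_client      on;

        # Forward the decrypted MQTT traffic to the brokers
        proxy_pass mqtt_brokers;
    }
}
```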

The $ssl_client_verify variable now includes the reason when client authentication fails, such as a “revoked” or “expired” certificate.

The format of the $ssl_client_i_dn and $ssl_client_s_dn variables has been changed to comply with RFCs 2253 and 4514. For more details, see Changes in Behavior.
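During a migration, an application that still parses the old slash‑separated format can receive both forms side by side. In the following sketch the header names, certificate paths, and the my_upstream group are hypothetical, and client certificates are made optional so that requests without one still reach the backend.

```nginx
server {
    listen 443 ssl;
    ssl_certificate        /etc/nginx/ssl/server.crt;
    ssl_certificate_key    /etc/nginx/ssl/server.key;
    ssl_client_certificate /etc/nginx/ssl/client_ca.crt;
    ssl_verify_client      optional;

    location / {
        # Subject DN in the new comma-separated (RFC 2253/4514) format
        proxy_set_header X-Client-DN        $ssl_client_s_dn;

        # Subject DN in the old slash-separated X509_NAME_oneline format
        proxy_set_header X-Client-DN-Legacy $ssl_client_s_dn_legacy;

        # SUCCESS, NONE, or FAILED followed by a reason
        proxy_set_header X-Client-Verify    $ssl_client_verify;

        proxy_pass http://my_upstream;
    }
}
```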

Enhanced JWT Validation

NGINX Plus R10 introduced native JSON Web Token (JWT) support for OAuth 2.0 and OpenID Connect use cases. One of the primary use cases is for NGINX Plus to validate a JWT, inspect the fields in it, and pass them to the backend application as HTTP headers. This allows applications to easily participate in an OAuth 2.0 single sign‑on (SSO) environment by offloading the token validation to NGINX Plus and consuming user identity by reading HTTP headers.

NGINX Plus R10 and later can inspect the fields defined in the JWT specification. NGINX Plus R12 extends JWT support so that any field in a JWT (including custom fields) can be accessed as an NGINX variable and therefore proxied, logged, or used to make authorization decisions.
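As a sketch of how a custom field might be used, the following configuration validates a JWT, logs a standard claim and a custom claim, and forwards both to the backend as headers. It assumes a JWK file at a hypothetical path, a hypothetical custom claim named department (exposed as $jwt_claim_department), hypothetical header names, and an upstream group my_upstream defined elsewhere.

```nginx
http {
    # Record the subject and a custom claim with each request
    log_format jwt_log '$remote_addr sub=$jwt_claim_sub dept=$jwt_claim_department "$request" $status';

    server {
        listen 80;
        access_log /var/log/nginx/access_jwt.log jwt_log;

        location /api/ {
            # Require a valid JWT, verified against the keys in this JWK file
            auth_jwt "api";
            auth_jwt_key_file /etc/nginx/api_keys.jwk;

            # Forward a standard claim and a custom claim as HTTP headers
            proxy_set_header X-User-Subject    $jwt_claim_sub;
            proxy_set_header X-User-Department $jwt_claim_department;

            proxy_pass http://my_upstream;
        }
    }
}
```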

Upgrade or Try NGINX Plus

If you’re running NGINX Plus, we strongly encourage you to upgrade to Release 12 as soon as possible. You’ll pick up a number of fixes and improvements, and it will help us to help you if you need to raise a support ticket. Installation and upgrade instructions can be found at the customer portal.

Please refer to the changes in behavior described above before proceeding with the upgrade.

If you’ve not tried NGINX Plus, we encourage you to try it out for web acceleration, load balancing, and application delivery, or as a fully supported web server with enhanced monitoring and management APIs. You can get started for free today with a 30‑day evaluation and see for yourself how NGINX Plus can help you deliver and scale out your applications.